Sunday, December 16, 2012

Snow Accumulation and Generation Final

Finally Done!!

Thanks to Patrick and Karl.

Video:

Code and Paper Link: https://github.com/DemiChen/SnowAccumulation

Friday, December 14, 2012

Sunday, December 9, 2012

Snow Accumulation Progress

I spent my weekend figuring out how to render to texture.

I ran into several problems, mostly due to my inexperience. I found that I had never truly learned OpenGL before, and programming in it is really hard for me. OpenGL has so many features, and getting even one image onto the screen often takes a lot of API calls. I kept getting lost and forgetting something, and then spent a lot of time hunting down the resulting bugs. One problem was the index type: the meshes came out deformed because the OBJ loader source code uses int for indices, while glDrawElements only accepts unsigned index types such as GL_UNSIGNED_BYTE and GL_UNSIGNED_SHORT (plus GL_UNSIGNED_INT on desktop OpenGL), and the index data has to match the type passed to the draw call.

Let me talk about what I learned this week. I learned how to use OpenGL framebuffer objects. A framebuffer has several attachment points, such as color and depth, and each attachment can be backed by either a renderbuffer or a texture object. In my project I render to textures. In GLSL, the fragment shader writes to the attachments directly through its out variables. Don't forget to give each out variable a location, so that OpenGL knows which variable writes to which buffer.

Now I have a new problem: I don't know how to output two depth maps from a single shader. I pass in two transformation matrices and send two position vectors to the fragment shader, which writes them into the output textures. Unfortunately, this method doesn't work.. T T..

Monday, December 3, 2012

Falling Snow

So far I haven't made much progress. I only got the falling snow partly working.

The theory is simple. First, generate random positions and scales for the snowflakes. Then calculate the alpha value of each flake's color from its depth. Essentially, a snowflake is just a simple square. What makes it look like a snowflake is the texture attached to the square.

Although the method is simple, I ran into problems in the implementation. The biggest obstacle is that I am a beginner with the three.js library. Because three.js is not complete yet, some tutorial code does not even run on my machine, and I have to spend a lot of time fixing it. That is exactly why my snowflakes are still squares. I don't know why I couldn't attach the texture to the squares, although I did exactly what the tutorial said. For the animation, as usual, I do it in the vertex shader.
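The generation step described above can be sketched in a few lines. This is only an illustration in Python, not the three.js code from the project: the function names, the linear depth-to-alpha mapping, and the constants 0.2 and 0.25 are all assumptions picked for the example, and the fall animation is done on the CPU here rather than in a vertex shader.

```python
import random


def make_snowflakes(count, width, height, max_depth, seed=0):
    """Create snowflakes with a random position, depth, scale and alpha.

    The mapping from depth to scale and alpha is a hypothetical linear
    falloff: near flakes are large and opaque, far flakes small and faint.
    """
    rng = random.Random(seed)
    flakes = []
    for _ in range(count):
        depth = rng.uniform(0.0, max_depth)
        nearness = 1.0 - depth / max_depth  # 1 at the camera, 0 at max_depth
        flakes.append({
            "x": rng.uniform(0.0, width),
            "y": rng.uniform(0.0, height),
            "depth": depth,
            "scale": 0.25 + 0.75 * nearness,
            "alpha": 0.2 + 0.8 * nearness,
        })
    return flakes


def fall(flakes, dt, speed, height):
    """Move every flake down and wrap it back to the top when it leaves."""
    for flake in flakes:
        # Larger (nearer) flakes fall faster, which gives a parallax feel.
        flake["y"] = (flake["y"] - speed * flake["scale"] * dt) % height


flakes = make_snowflakes(count=200, width=800.0, height=600.0, max_depth=50.0)
fall(flakes, dt=0.016, speed=30.0, height=600.0)
```

Each flake would then be drawn as a textured square whose size and transparency come from `scale` and `alpha`.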

Thursday, November 22, 2012

Snow Shading Kick Off

Finally, I chose to do snow shading for my final project. I like snow, and it will be interesting to construct a snow-white world. There are two parts in this project: snow accumulation and snowfall. After some research, I have started to get some ideas for snow shading.

Snow Accumulation

Snow distribution differs across terrain and is determined by height and gradient. I need to calculate the snow coverage of the terrain: a surface may or may not be exposed to the snow, and the height of the accumulated snow also varies. How do we calculate exposure? We can transform the models into an orthographic view from the sky, and then use the depth to determine whether a point is occluded. The accumulation height can be derived from the normals. When rendering the snow, we also need some noise to make it glisten.
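A minimal sketch of the exposure and accumulation idea, written in Python for readability rather than as a shader. It assumes y is up, stands in for the orthographic depth pass with a coarse grid that keeps the highest point per (x, z) cell, and uses the dot product of the normal with the up vector as the accumulation factor. The function name and the `cell`, `eps` and `max_snow` parameters are hypothetical.

```python
def snow_heights(points, normals, cell=1.0, eps=1e-3, max_snow=1.0):
    """Return a snow height for every surface point.

    points  -- list of (x, y, z) positions, y pointing up
    normals -- matching list of unit normals
    """
    # Pass 1: a top-down "depth map" that stores the highest y per cell,
    # which is what an orthographic camera looking down would see.
    top = {}
    for x, y, z in points:
        key = (int(x // cell), int(z // cell))
        if key not in top or y > top[key]:
            top[key] = y

    # Pass 2: a point is exposed if nothing in its cell is above it.
    heights = []
    for (x, y, z), (nx, ny, nz) in zip(points, normals):
        key = (int(x // cell), int(z // cell))
        exposed = y >= top[key] - eps
        slope = max(0.0, ny)  # dot(normal, up): flat ground 1, wall 0
        heights.append(max_snow * slope if exposed else 0.0)
    return heights


points = [(0.5, 5.0, 0.5), (0.5, 0.0, 0.5), (3.5, 0.0, 0.5), (6.5, 1.0, 0.5)]
normals = [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)]
print(snow_heights(points, normals))
```

In the example, the roof and the open ground collect snow, while the ground under the roof and the vertical wall collect none.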

Snow Fall

http://oos.moxiecode.com/js_webgl/snowfall/

This is the result I want.

First I need a function that generates snow in a 2D plane and gives each flake a random depth, then determines its size and blur from that depth. Maybe I could also design different shapes for the snowflakes.

Hope my final project goes well!!

Reference:

http://http.download.nvidia.com/developer/SDK/Individual_Samples/DEMOS/Direct3D9/src/SnowAccumulation/Docs/SnowAccumulation.pdf

http://jimbomania.com/software/snowfall.html

http://171.67.77.70/courses/cs448-01-spring/papers/fearing.pdf

Wednesday, November 21, 2012

GLSL2

Here are some results from this project.

Feature implemented:

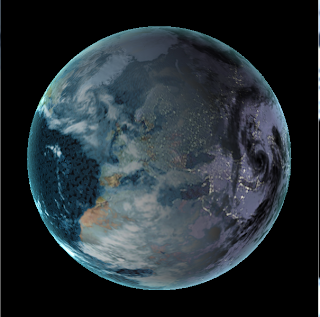

- Bump mapped terrain

- Rim lighting to simulate atmosphere

- Nighttime lights on the dark side of the globe

- Specular mapping

- Moving clouds

- Water animation

- Screen Space Ambient Occlusion

- Vertex Morphing (morphing between a sphere and the cow) (press the M/m button, N/n for pass shader)

- Vertex Pulsing (press the P/p button, N/n for pass shader)

Something is off with the vertex pulsing, even though I move the vertices along their normals. I believe the reason is that a single vertex position has several normals in the buffer, so when I move the vertices, the copies go in different directions. As a result, the cow explodes.
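One possible fix, sketched here in Python under the assumption that the diagnosis above is right: average the normals of all vertex copies that share a position before displacing them, so every copy moves in the same direction. The function names and the sine-based pulse are illustrative, not the shader code from the project.

```python
import math
from collections import defaultdict


def smooth_normals(positions, normals):
    """Give all vertices at the same position one shared, averaged normal."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    for p, n in zip(positions, normals):
        acc = sums[p]
        for i in range(3):
            acc[i] += n[i]
    result = []
    for p in positions:
        sx, sy, sz = sums[p]
        length = math.sqrt(sx * sx + sy * sy + sz * sz) or 1.0
        result.append((sx / length, sy / length, sz / length))
    return result


def pulse(positions, normals, time, amplitude=0.1):
    """Displace every vertex along its normal by amplitude * sin(time)."""
    offset = amplitude * math.sin(time)
    return [tuple(p[i] + offset * n[i] for i in range(3))
            for p, n in zip(positions, normals)]


# Two copies of one corner with different face normals, plus another vertex.
positions = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
normals = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
moved = pulse(positions, smooth_normals(positions, normals), time=math.pi / 2)
```

With the raw normals the two copies of the corner drift apart and open a crack. With the averaged normals they stay together.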

12 * 12 Grid

4 * 4 Grid

Friday, November 9, 2012

GLSL Programming

Filters:

* Image negative (3)

* Gaussian blur (4)

* Grayscale (5)

* Edge Detection (6)

* Toon shading (7)

* Pixelate (8)

* Contrast (9)

* Swirl (0)

Vertex Shader:

* A sin-wave based vertex shader:

* A simplex noise based vertex shader:

* A hat-like vertex shader:

Wednesday, November 7, 2012

Rasterization_2

I fixed one problem: I had derived the inverse of the eye perspective matrix incorrectly. It looks much more normal now.

Added another mouse movement.

Alt + Middle for translation.

Tuesday, November 6, 2012

Rasterization

Graphics pipeline stages included:

1. Vertex Shading

2. Primitive Assembly

3. Perspective Transformation

4. Scanline Rasterization

5. Fragment Shading

6. Depth Buffer

7. Lambert Shading

8. Scissor Test

9. Blending

Finally, the Maya Style interactive camera.

Alt + Left Click to rotate the camera.

Alt + Middle to translate the camera.

Press s for the scissor test effect. After pressing s, click and drag the mouse to select the scissor area.

Press b for blending effects.

Friday, October 12, 2012

PathTracer

I spent a lot of time trying to add direct light. But the result is not good. Frustrated.

Features now

I didn't have time to finish the required features by the deadline. I have written the needed functions, but I haven't debugged them yet.

Optional features:

1. Motion blur. I move the object over time and then blend the resulting images together. The speed changes with time.

2. Depth of field. Find the focus point, jitter the camera, and then generate a new ray. The middle sphere is in focus. The nearer and farther ones are blurred.

3. Refraction. I use the Fresnel equations to calculate the reflection and refraction coefficients.

Limitations: The convergence speed is very slow.
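For the refraction item, the reflection coefficient can be computed with the Fresnel equations for an unpolarized ray at a dielectric boundary. The sketch below is in Python and is not the CUDA code from the project. The function name and parameters are assumptions: `n1` is the refractive index on the incoming side and `n2` the index on the other side.

```python
import math


def fresnel_reflectance(cos_i, n1, n2):
    """Fraction of light reflected at a boundary between two dielectrics.

    cos_i -- cosine of the angle between the incoming ray and the normal
    n1    -- refractive index on the incoming side
    n2    -- refractive index on the transmitted side
    """
    cos_i = min(1.0, max(0.0, cos_i))
    # Snell's law gives sin^2 of the transmitted angle.
    sin_t2 = (n1 / n2) ** 2 * (1.0 - cos_i * cos_i)
    if sin_t2 >= 1.0:
        return 1.0  # total internal reflection, nothing is refracted
    cos_t = math.sqrt(1.0 - sin_t2)
    r_s = (n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)
    r_p = (n1 * cos_t - n2 * cos_i) / (n1 * cos_t + n2 * cos_i)
    return 0.5 * (r_s * r_s + r_p * r_p)


reflect = fresnel_reflectance(1.0, 1.0, 1.5)  # air to glass, head on
refract = 1.0 - reflect
```

The refraction coefficient is simply one minus the reflection coefficient, so the two always add up to the incoming energy.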

Path tracing 1

The problem now is slow convergence. I am not sure whether I should split the rendering equation into two parts, direct illumination and indirect illumination. When I add direct illumination, the picture eventually becomes very bright. Without the direct illumination, convergence seems very slow. The picture with direct illumination is warmer.
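For reference, the split in question can be written as follows. This is the standard textbook form of the rendering equation, not something taken from the project code. Here $L_{\text{direct}}$ is the light arriving straight from the light sources and $L_{\text{indirect}}$ is light that has bounced at least once.

```latex
\begin{aligned}
L_o(x,\omega_o) &= L_e(x,\omega_o)
  + \int_{\Omega} f_r(x,\omega_i,\omega_o)\,L_i(x,\omega_i)\cos\theta_i\,d\omega_i,\\
L_i(x,\omega_i) &= L_{\text{direct}}(x,\omega_i) + L_{\text{indirect}}(x,\omega_i).
\end{aligned}
```

If the direct term is sampled explicitly at every bounce, a path that later hits a light by chance should not add $L_e$ again, otherwise the same light is counted twice. That kind of double counting is one common reason a picture gets too bright, though it may not be the cause here.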

Wednesday, October 3, 2012

Corrected GPU Raytracing

Today I found that my horizontal direction was flipped compared with everyone else's, so I reversed it. Besides, I had misunderstood the specular formula, which led me to believe that only reflective materials have specular effects. I corrected this too. Here are the new pictures. A small change, but it looks much better now! hoho. I also changed some parameters. Next time, I will definitely start making my demo earlier. My demo this time is really ugly and hasty.. T T

Today I started to realize how powerful a path tracer is. A lot of features come for free. Amazing path tracing. HAHA. Looking forward to finishing the path tracer!!!

Sunday, September 30, 2012

First Post on GPU Ray Tracing project

GPU Ray Tracing

GPU ray tracing is much more complicated than CPU ray tracing. Annoying debugging is one important reason. Another is that I do not fully understand the underlying theory, especially refraction and the BSDF. I am still trying to figure out how to blend the refraction ray and the reflection ray together. Randomly choose one to follow? The result is not good: the image has many dark points and is not as shiny as it deserves to be. Sigh. Without a great framework, I cannot imagine how many years it would take me to finish such a project.

So far I have only added a few features: camera interaction, normal map, texture map, specular, and half of refraction.. Admittedly, there are still some small bugs in this part, but I am still working hard on it.

Tuesday, April 3, 2012

photon mapping

I researched photon mapping in more detail.

First pass:

Photon Map

Use the basic steps of ray tracing. The difference is that Russian roulette decides whether a photon is absorbed, reflected, or refracted. Reflection uses the BRDF model, and refraction follows Snell's law. Global map: trace from the light. Use projection maps to generate photons. Different lights use different strategies for generating photons.

Caustic map: This map needs a large number of photons.
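The Russian roulette step can be sketched as a single random draw per photon hit. This is a minimal Python illustration; the function name and the idea of passing the reflection and refraction probabilities as plain numbers are assumptions, and in practice those probabilities would come from the material.

```python
import random


def russian_roulette(p_reflect, p_refract, rng=random):
    """Decide the fate of one photon: 'reflect', 'refract' or 'absorb'.

    The absorption probability is whatever is left: 1 - p_reflect - p_refract.
    """
    if p_reflect < 0.0 or p_refract < 0.0 or p_reflect + p_refract > 1.0:
        raise ValueError("probabilities must be non-negative and sum to <= 1")
    xi = rng.random()  # uniform in [0, 1)
    if xi < p_reflect:
        return "reflect"
    if xi < p_reflect + p_refract:
        return "refract"
    return "absorb"


rng = random.Random(7)
fates = [russian_roulette(0.5, 0.3, rng) for _ in range(10)]
```

In the usual formulation a surviving photon keeps its full power, because the probability of survival already accounts for the lost energy. That keeps all stored photons at a similar power.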

Second Pass:

Rendering Image

1. Direct Illumination: a ray is traced from the point of intersection to each light source. As long as the ray does not intersect another object, the light source is used to calculate the direct illumination.

2. Indirect Illumination: use the global photon map to estimate it.

3. Caustic Illumination: use the caustic map.

4. Specular Reflection: handle it as in ray tracing.

Use Photon Map to estimate radiance
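A minimal sketch of the radiance estimate, in Python. It assumes a purely diffuse surface, a single color channel, and a brute-force search for the k nearest photons instead of a KD-tree. The function name and the photon layout as `(position, power)` pairs are hypothetical.

```python
import math


def estimate_radiance(photons, point, k, albedo):
    """Density estimate of reflected radiance at a diffuse surface point.

    photons -- list of ((x, y, z), power) pairs stored in the photon map
    point   -- (x, y, z) position where radiance is wanted
    k       -- number of nearest photons to gather
    albedo  -- diffuse reflectance, so the BRDF is albedo / pi
    """
    if not photons or k <= 0:
        return 0.0
    by_distance = sorted(photons, key=lambda ph: math.dist(ph[0], point))
    nearest = by_distance[:k]
    # The gather radius is the distance to the farthest gathered photon.
    radius2 = math.dist(nearest[-1][0], point) ** 2
    if radius2 == 0.0:
        return 0.0
    flux = sum(power for _, power in nearest)
    # Sum of BRDF * power, divided by the disc area that held the photons.
    return (albedo / math.pi) * flux / (math.pi * radius2)


photons = [((1.0, 0.0, 0.0), 0.25), ((-1.0, 0.0, 0.0), 0.25),
           ((0.0, 0.0, 1.0), 0.25), ((0.0, 0.0, -1.0), 0.25),
           ((50.0, 0.0, 0.0), 0.25)]
radiance = estimate_radiance(photons, (0.0, 0.0, 0.0), k=4, albedo=1.0)
```

Sorting all photons is only acceptable for a toy example. A real photon map answers the nearest-neighbor query with a KD-tree.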

From what I have learned so far, the targets are as follows:

1. Russian roulette function

2. BRDF model

3. Snell's law

4. Tracing the photon map

5. Gathering

However, the biggest problem is still that I have no computer to do it on!!!!!! Frustrating..

Sunday, March 25, 2012

Modules of Basic Ray Tracing

Module 1: Mesh import. This part is done on the CPU.

Module 2: Construct the KD-tree. This module is done on the CPU.

Module 3: Main part. The GPU is responsible for this module.

Basically each thread is responsible for one ray.

Step1, Generate rays.

Step2, find intersections. Kernel functions: 1. KD-Tree search. 2. KD-Restart 3. KD-BackTrack

Step3, calculate colors and the reflected and refracted rays.

Then go to step 2.

One of the biggest problems is still how to remove the recursion in module 3. Maybe create a big buffer for every iteration and then add them together. However, this is really memory-consuming.
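One way to avoid the recursion is to keep an explicit stack of pending rays, each carrying the weight it contributes to the final pixel. The sketch below shows the idea in Python with a toy scene where every "hit" is looked up in a dictionary. The scene layout, the field names `local`, `kr`, `kt`, and both function names are made up for the example. The recursive version is included only to compare results.

```python
def shade_recursive(scene, ray, depth):
    """Classic recursive shading: local color plus weighted child rays."""
    if ray is None or depth == 0:
        return 0.0
    hit = scene[ray]
    return (hit["local"]
            + hit["kr"] * shade_recursive(scene, hit["reflect"], depth - 1)
            + hit["kt"] * shade_recursive(scene, hit["refract"], depth - 1))


def shade_iterative(scene, ray, max_depth):
    """Same result without recursion: a stack of (ray, weight, depth)."""
    color = 0.0
    stack = [(ray, 1.0, max_depth)]
    while stack:
        ray, weight, depth = stack.pop()
        if ray is None or depth == 0:
            continue
        hit = scene[ray]
        color += weight * hit["local"]
        # Child rays inherit the parent's weight times their coefficient.
        stack.append((hit["reflect"], weight * hit["kr"], depth - 1))
        stack.append((hit["refract"], weight * hit["kt"], depth - 1))
    return color


scene = {
    "primary": {"local": 0.5, "kr": 0.3, "reflect": "mirror",
                "kt": 0.6, "refract": "glass"},
    "mirror": {"local": 0.2, "kr": 0.9, "reflect": "primary",
               "kt": 0.0, "refract": None},
    "glass": {"local": 0.1, "kr": 0.1, "reflect": None,
              "kt": 0.8, "refract": "mirror"},
}
color = shade_iterative(scene, "primary", max_depth=5)
```

Because each pending ray only needs its weight and depth, the per-pixel storage is a small fixed-size stack instead of one full-screen buffer per iteration.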

Tuesday, March 20, 2012

Ray tracing

The ray tracing can be divided into two main sections:

1. Intersection algorithm

2. Trace

Section1:

This section is based on the paper "Real-Time KD-Tree Construction on Graphics Hardware".

The input of this section is a triangle list.

When constructing a KD-tree, we recursively split the current node into two parts. The coordination part is done on the CPU and proceeds as a BFS over the tree.

The parallelism is exploited as such:

1. Compute the AABB for all triangles in parallel.

2. Split nodes at the same level of the tree in parallel.

3. For larger nodes, calculate the bounding box of each node in parallel. Divide the triangles in each node into chunks, then process all chunks in all nodes in parallel. Once the chunk bounding boxes are finished, use the same method to get the bounding box of each node. This process uses the reduction algorithm.

4. For smaller nodes, it is really clever to use bit masks. In this way, the reduction algorithm finds its place again.
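The chunked bounding-box computation for large nodes (steps 1 and 3) can be sketched like this. It is a serial Python illustration of the data flow only: on the GPU every per-triangle box, every chunk, and every merge within one reduction round would run in parallel. The function names and the chunk size are assumptions, and the triangle list must not be empty.

```python
def triangle_aabb(tri):
    """AABB of one triangle given as three (x, y, z) vertices."""
    xs, ys, zs = zip(*tri)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def merge(a, b):
    """Smallest box that contains boxes a and b."""
    (amin, amax), (bmin, bmax) = a, b
    return tuple(map(min, amin, bmin)), tuple(map(max, amax, bmax))


def reduce_boxes(boxes):
    """Pairwise tree reduction; merges within one round are independent."""
    boxes = list(boxes)
    while len(boxes) > 1:
        merged = [merge(boxes[i], boxes[i + 1])
                  for i in range(0, len(boxes) - 1, 2)]
        if len(boxes) % 2:
            merged.append(boxes[-1])  # odd one out moves to the next round
        boxes = merged
    return boxes[0]


def node_aabb(triangles, chunk_size=4):
    """Per-triangle boxes, then per-chunk boxes, then one box for the node."""
    per_triangle = [triangle_aabb(t) for t in triangles]
    chunks = [per_triangle[i:i + chunk_size]
              for i in range(0, len(per_triangle), chunk_size)]
    chunk_boxes = [reduce_boxes(c) for c in chunks]
    return reduce_boxes(chunk_boxes)


triangles = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
             ((2.0, -1.0, 0.5), (3.0, 0.0, 0.5), (2.0, 1.0, 4.0))]
low, high = node_aabb(triangles)
```

The same `reduce_boxes` routine is used at both levels, which mirrors the "use the same method" remark in step 3.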

Section 2:

Obviously, each pixel can be processed in parallel. However, a big problem is the recursion in ray tracing. I haven't started working on this problem yet.

Problems to be solved:

1. How to import meshes into my program first. Maybe I can import meshes from Maya.

2. Figure out more details about the KD-tree, like SAH cost, spatial median splitting, empty space maximizing, and how to incorporate reduction.

Sunday, March 18, 2012

Whole Picture for Rendering

The work is much more than I thought. I found some resources for my project.

1. "Real-Time KD-Tree Construction on Graphics Hardware"

2. "An Efficient GPU-based Approach for Interactive Global Illumination"

3. "Fast GPU Ray Tracing of Dynamic Meshes using Geometry Images"

This project can be divided into two parts: GPU ray tracing and GPU photon mapping. I am not confident that I can finish the whole project in the end, but at least I should complete the ray tracing part.

1. GPU Ray Tracing

It is responsible for direct illumination of the scene.

Actually, I wrote a CPU ray tracer last semester, but the results were not good and there were some artifacts. I am not sure whether to improve my old work or modify an open source ray tracer. To develop the ray tracer, I plan to combine the 1st paper and the 3rd paper.

2. GPU Photon Mapping

It handles the global illumination. First, construct the photon map. This part is not difficult. It is similar to ray tracing and based on "Real-Time KD-Tree Construction". The most time-consuming part may be the final gathering process, because computing the outgoing radiance needs a lot of samples. One paper tries to reduce the number of photons, and the results are good. Unfortunately, I failed to understand the details of this method, so I decided to give up on it.

In the following week, I will mainly focus on the ray tracing part. I hope I can make a big step forward.

Tuesday, March 13, 2012

Proposal for final project

Ocean Simulation and Render Proposal

Yuanhui Chen, Tao Lei

For the CIS565 final project, we are going to do ocean simulation and rendering. Yuanhui Chen, a 1st-year master's student in CGGT, will mainly work on the rendering part. Tao Lei, a 1st-year master's student in EE, will focus on simulation. Fluid animation is popular in games and special effects. However, it is hard to get the desired effects because of its computational complexity, so exploiting the computing power of the GPU becomes an effective solution.

We are going to use the Smoothed Particle Hydrodynamics (SPH) method to do the ocean simulation. SPH's drawback compared with grid-based methods is that it requires a large number of particles to produce a simulation of equivalent resolution. But since we are using the GPU, which is good at computation-intensive tasks, this drawback should matter much less. The SPH method is also inherently parallel and has little data dependency, which makes it a good fit for the GPU. Although physically based fluid animation has historically been the domain of high-quality offline rendering due to its great computational cost (GPU Gems Chap 30.1 pg633), we are going to simulate it in real time.
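To illustrate why SPH parallelizes well, here is a minimal Python sketch of the density step. It assumes the poly6 smoothing kernel that is common in interactive SPH and uses a brute-force loop over all particle pairs; the proposal itself does not fix a kernel or a neighbor search, so both are assumptions, as are the function names.

```python
import math


def poly6(r, h):
    """Poly6 smoothing kernel in 3D for distance r and support radius h."""
    if r > h:
        return 0.0
    return 315.0 / (64.0 * math.pi * h ** 9) * (h * h - r * r) ** 3


def densities(positions, mass, h):
    """Density at every particle as a kernel-weighted sum over all particles.

    Each entry depends only on the positions, never on another particle's
    density, so on a GPU one thread per particle could compute it.
    """
    result = []
    for p_i in positions:
        rho = 0.0
        for p_j in positions:
            rho += mass * poly6(math.dist(p_i, p_j), h)
        result.append(rho)
    return result


positions = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (10.0, 0.0, 0.0)]
rho = densities(positions, mass=1.0, h=1.0)
```

The two close particles get the same, higher density, while the isolated particle only sees its own contribution. A real implementation would replace the inner loop with a spatial grid so each particle only visits nearby cells.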

For the rendering part, we will try several methods and then decide which one to implement. The first option is to use a marching method to generate an isosurface from the density field and then use volume rendering to visualize the isosurface. The marching method is based on a paper, "Using the GPU programmable Geometry Pipeline". It combines marching cubes and marching tetrahedra. The second option is photon mapping. Photon mapping is a good choice for adding refraction, reflection, and global illumination. However, it is expensive, especially when we need to change the view frequently.

We also plan to add interactions: interaction between the ocean and the coast, and interaction between the water and floating objects. We refer to "Animating the Interplay Between Rigid Bodies and Fluid". It extends the SPH model by adding rigid body forces and enforcing rigid body motion. We will also take wind effects into consideration.

Although this project is about ocean simulation and rendering, we are not going to confine our work to the ocean. We may also cover cloud and terrain simulation and rendering to make our picture rich and full.

Video:

http://www.youtube.com/watch?v=oNPJKBjuHIY (ocean simulation)

http://www.youtube.com/watch?v=d818Bjef6Yc (ocean render)
